I am an Assistant Professor in ECE at UCLA, where I direct the Human-Centered Computing and Intelligent Sensing Lab (HiLab). My research develops new perceptual and interactive capabilities for devices embedded in our physical environments. Together, these systems advance practical, inclusive, and sustainable ambient intelligence that supports people in real-world tasks. Recently, my work has expanded beyond sensing to include actuation and inference, leveraging advances in language models to enable applications in the Internet of Things, personal informatics, and accessibility. A taxonomy of my research appears below.

[Research focus diagram inspired by Professor Bjoern Hartmann]

Research

Please refer to HiLab's webpage for more recent work.

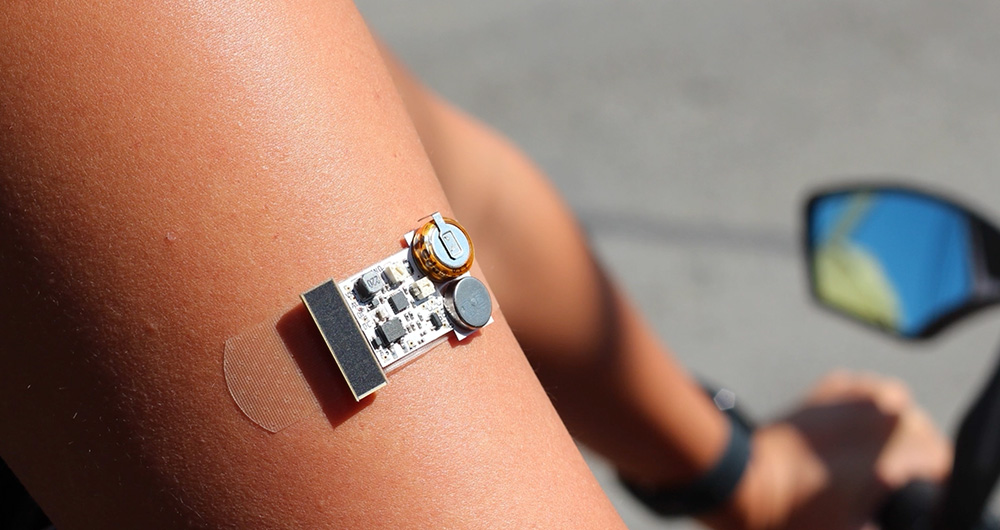

Hapt-Aids: Self-Powered, On-Body Haptics for Activity Monitoring

Xiaoying Yang, Vivian Shen, Chris Harrison, Yang Zhang (IMWUT 2025)

[Video]

[DOI]

[PDF]

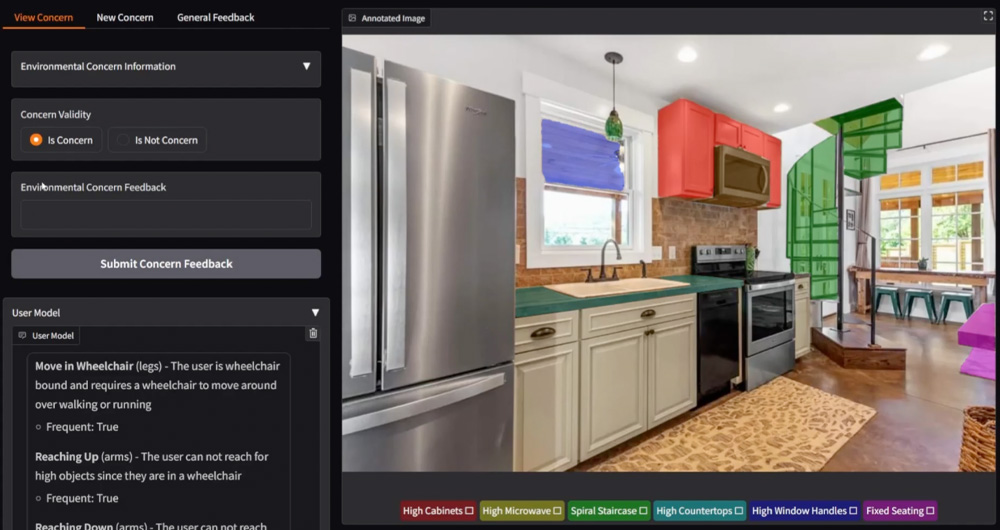

Accessibility Scout: Personalized Accessibility Scans of Built Environments

William Huang, Xia Su, Jon E. Froehlich, Yang Zhang (UIST 2025)

[Video]

[DOI]

[PDF]

[Github]

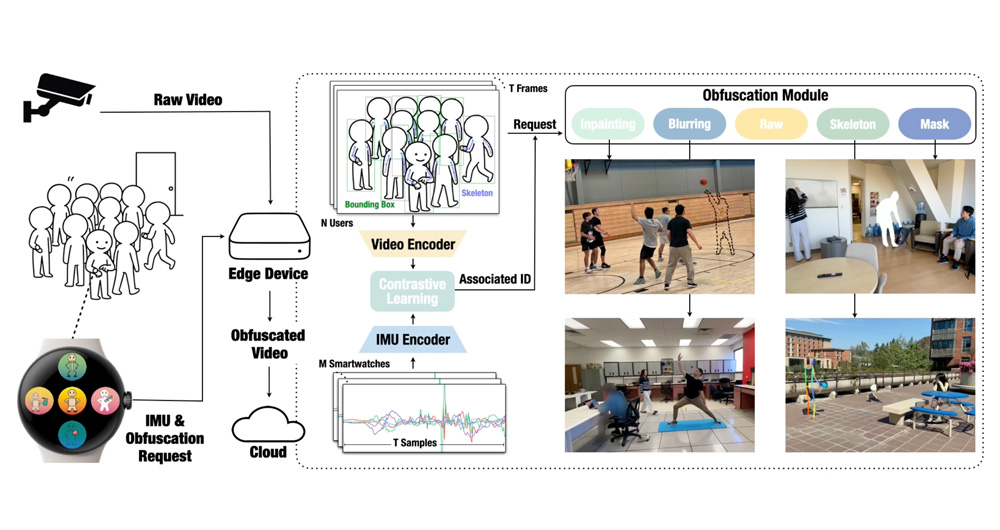

Invisibility Cloak: Personalized Smartwatch-Guided Camera Obfuscation

Xue Wang, Yang Zhang (UIST 2025)

[Video]

[DOI]

[PDF]

[Github]

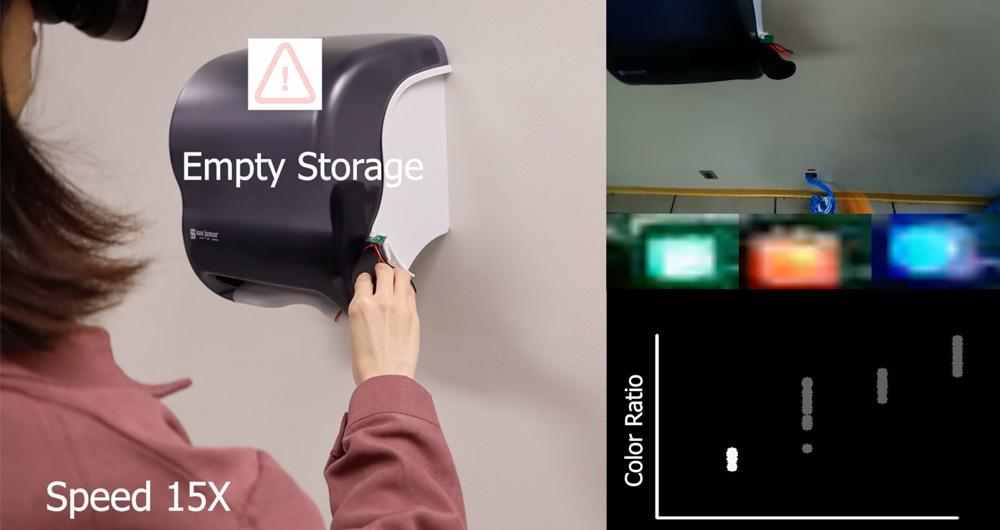

LuxAct: Enhance Everyday Objects with Interaction-Powered Illumination for Visual Communication

Xiaoying Yang, Qian Lu, Jeeeun Kim, Yang Zhang (UIST 2025)

[Video]

[DOI]

[PDF]

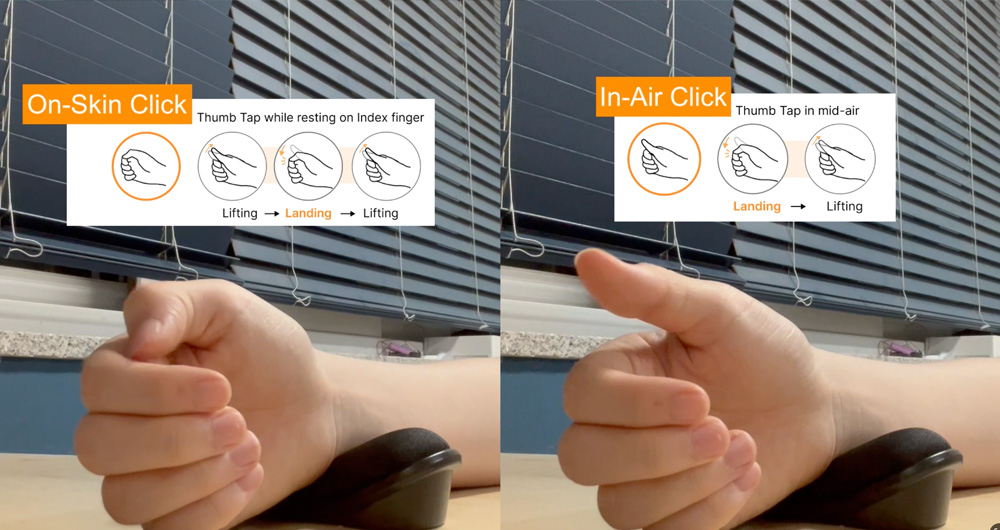

T2IRay: Design of Thumb-to-Index based Indirect Pointing for Continuous and Robust AR/VR Input

LumosX: 3D Printed Anisotropic Light-Transfer

Qian Lu, Xiaoying Yang, Xue Wang, Jacob Sayono, Yang Zhang, Jeeeun Kim (CHI 2025)

[DOI]

[PDF]

EchoSight: Streamlining Bidirectional Virtual-physical Interaction with In-situ Optical Tethering

Jingyu Li, Qingwen Yang, Kenuo Xu, Yang Zhang, Chenren Xu (CHI 2025)

[DOI]

[PDF]

Haptic Artificial Muscle Skin for Extended Reality

Yuxuan Guo, Yang Luo, Roshan Plamthottam, Siyou Pei, Chen Wei, Ziqing Han, Jiacheng Fan, Mason Possinger, Kede Liu, Yingke Zhu, Zhangqing Fei, Isabelle Winardi, Hyeonji Hong, Yang Zhang, Lihua Jin, Qibing Pei (Science Advances 2024)

[DOI]

[PDF]

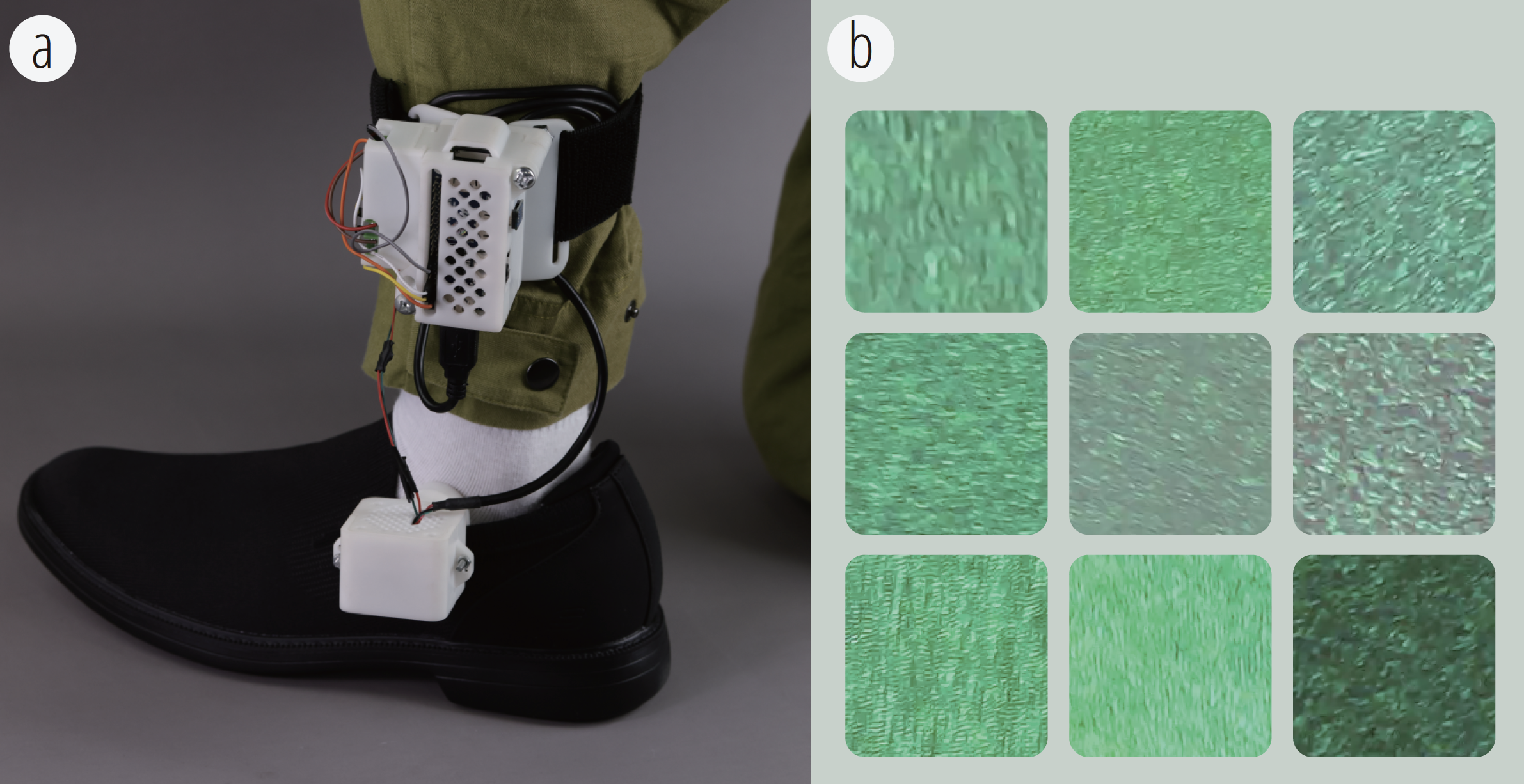

TextureSight: Texture Detection for Routine Activity Awareness with Wearable Laser Speckle Imaging

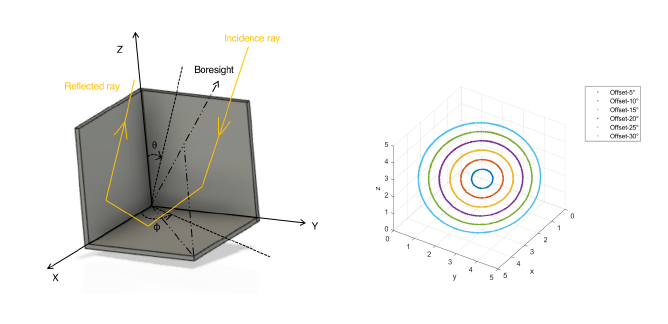

CubeSense++: Smart Environment Sensing with Interaction-Powered Corner Reflector Mechanisms

Xiaoying Yang, Jacob Sayono, Yang Zhang (UIST 2023)

[Video]

[DOI]

[PDF]

Headar: Sensing Head Gestures for Confirmation Dialogs on Smartwatches with Wearable Millimeter-Wave Radar

Xiaoying Yang, Xue Wang, Gaofeng Dong, Zihan Yan, Mani Srivastava, Eiji Hayashi, Yang Zhang (IMWUT 2023)

[Video]

[DOI]

[PDF]

Embodied Exploration: Facilitating Remote Accessibility Assessment for Wheelchair Users with Virtual Reality

Siyou Pei, Alexander Chen, Chen Chen, Mingzhe "Franklin" Li, Megan Fozzard, Hao-Yun Chi, Nadir Weibel, Patrick Carrington, Yang Zhang (ASSETS 2023)

[Video]

[DOI]

[PDF]

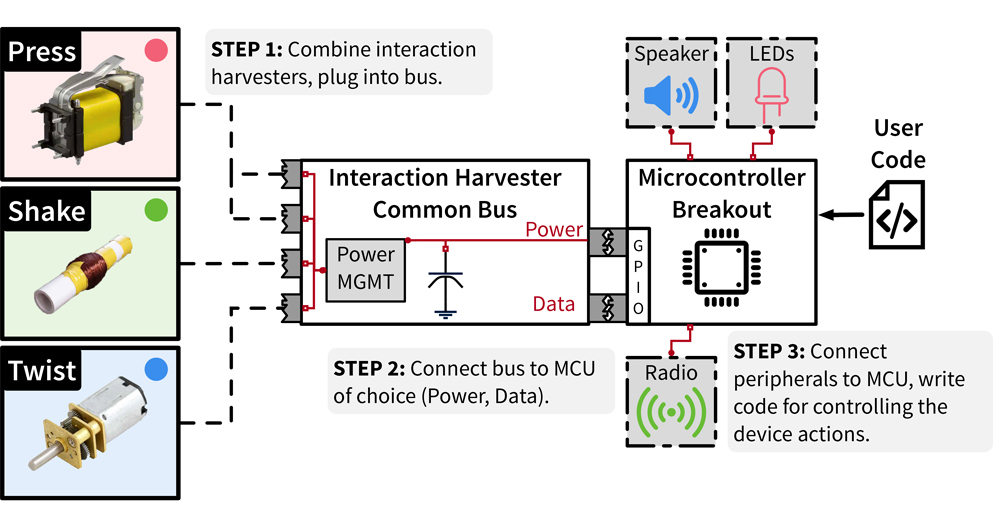

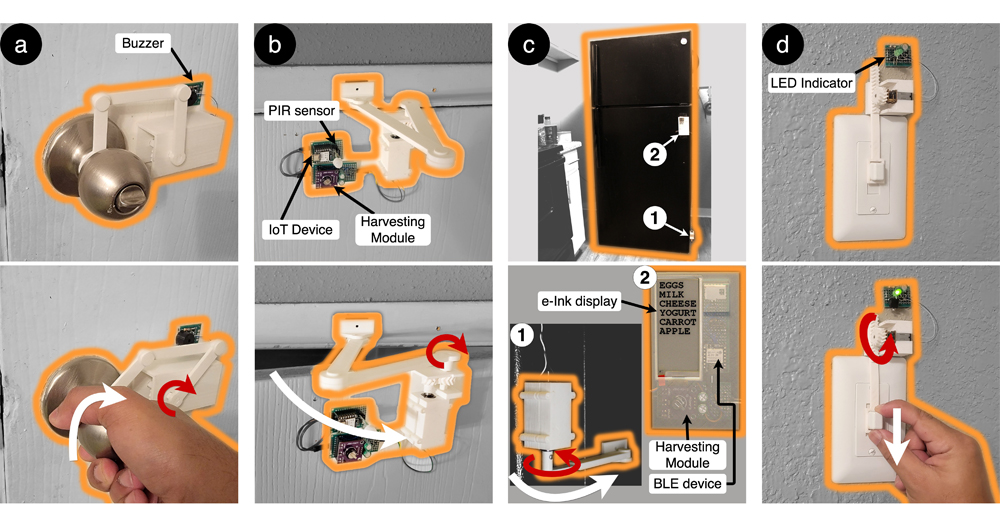

Interaction Harvesting: A Design Probe of User-Powered Widgets

John Mamish, Amy Guo, Thomas Cohen, Julian Richey, Yang Zhang, Josiah Hester (IMWUT 2023)

[DOI]

[PDF]

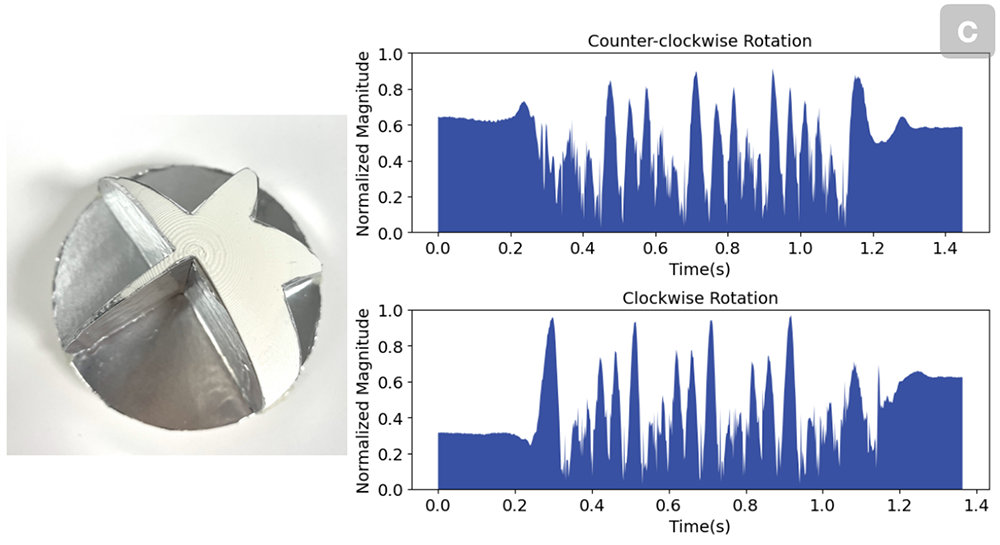

E3D: Harvesting Energy from Everyday Kinetic Interactions Using 3D Printed Attachment Mechanisms

Abul Al Arabi, Xue Wang, Yang Zhang, Jeeeun Kim (IMWUT 2023)

[DOI]

[PDF]

Watch Your Mouth: Silent Speech Recognition with Depth Sensing

Xue Wang, Zixiong Su, Jun Rekimoto, Yang Zhang (CHI 2024)

[Video]

[DOI]

[PDF]

[Github]

Honorable Mention Award

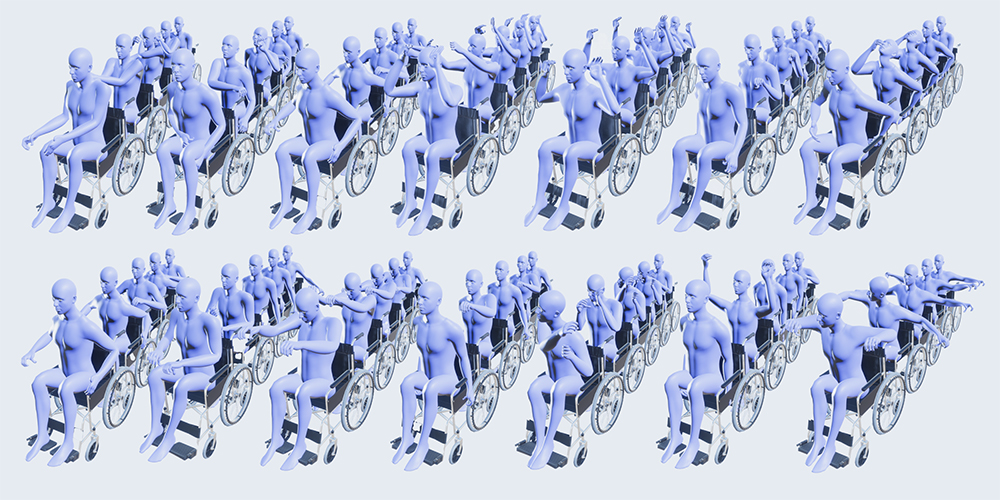

WheelPose: Data Synthesis Techniques to Improve Pose Estimation Performance on Wheelchair Users

William Huang, Sam Ghahremani, Siyou Pei, Yang Zhang (CHI 2024)

[Video]

[DOI]

[PDF]

[Github]

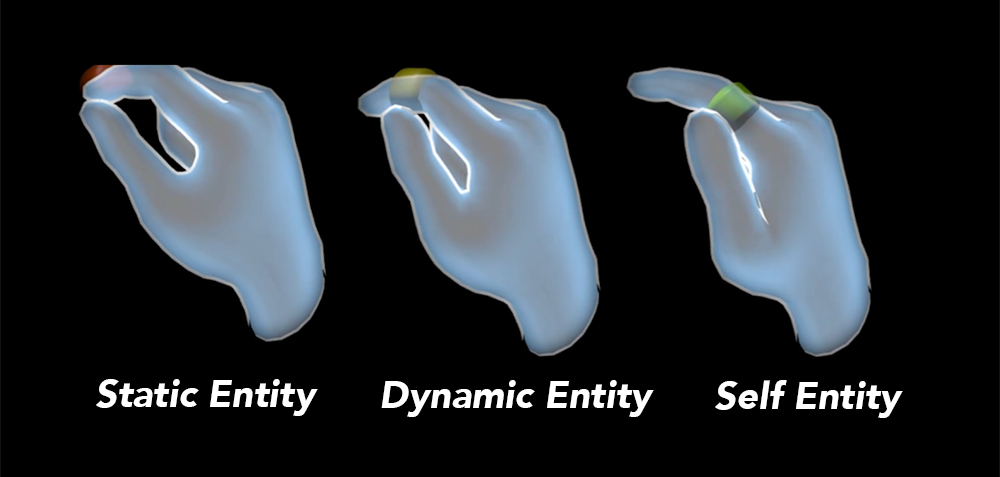

UI Mobility Control in XR: Switching UI Positionings between Static, Dynamic, and Self Entities

Siyou Pei, David Kim, Alex Olwal, Yang Zhang, Ruofei Du (CHI 2024)

[Video]

[DOI]

[PDF]

Bring Environments to People – A Case Study of Virtual Tours in Accessibility Assessment for People with Limited Mobility

Hao-Yun Chi, Jingzhen 'Mina' Sha, Yang Zhang (W4A 2023)

[Slides]

[DOI]

[PDF]

LaserShoes: Low-Cost Ground Surface Detection Using Laser Speckle Imaging

Zihan Yan, Yuxiaotong Lin, Guanyun Wang, Yu Cai, Peng Cao, Haipeng Mi, Yang Zhang (CHI 2023)

[Video]

[Slides]

[DOI]

[PDF]

ForceSight: Non-Contact Force Sensing with Laser Speckle Imaging

Siyou Pei, Pradyumna Chari, Xue Wang, Xiaoying Yang, Achuta Kadambi, Yang Zhang (UIST 2022)

[Video]

[Slides]

[DOI]

[PDF]

Freedom to Choose: Understanding Input Modality Preferences of People with Upper-body Motor Impairments for Activities of Daily Living

Mingzhe Li, Xieyang Liu, Yang Zhang, Patrick Carrington (ASSETS 2022)

[DOI]

[PDF]

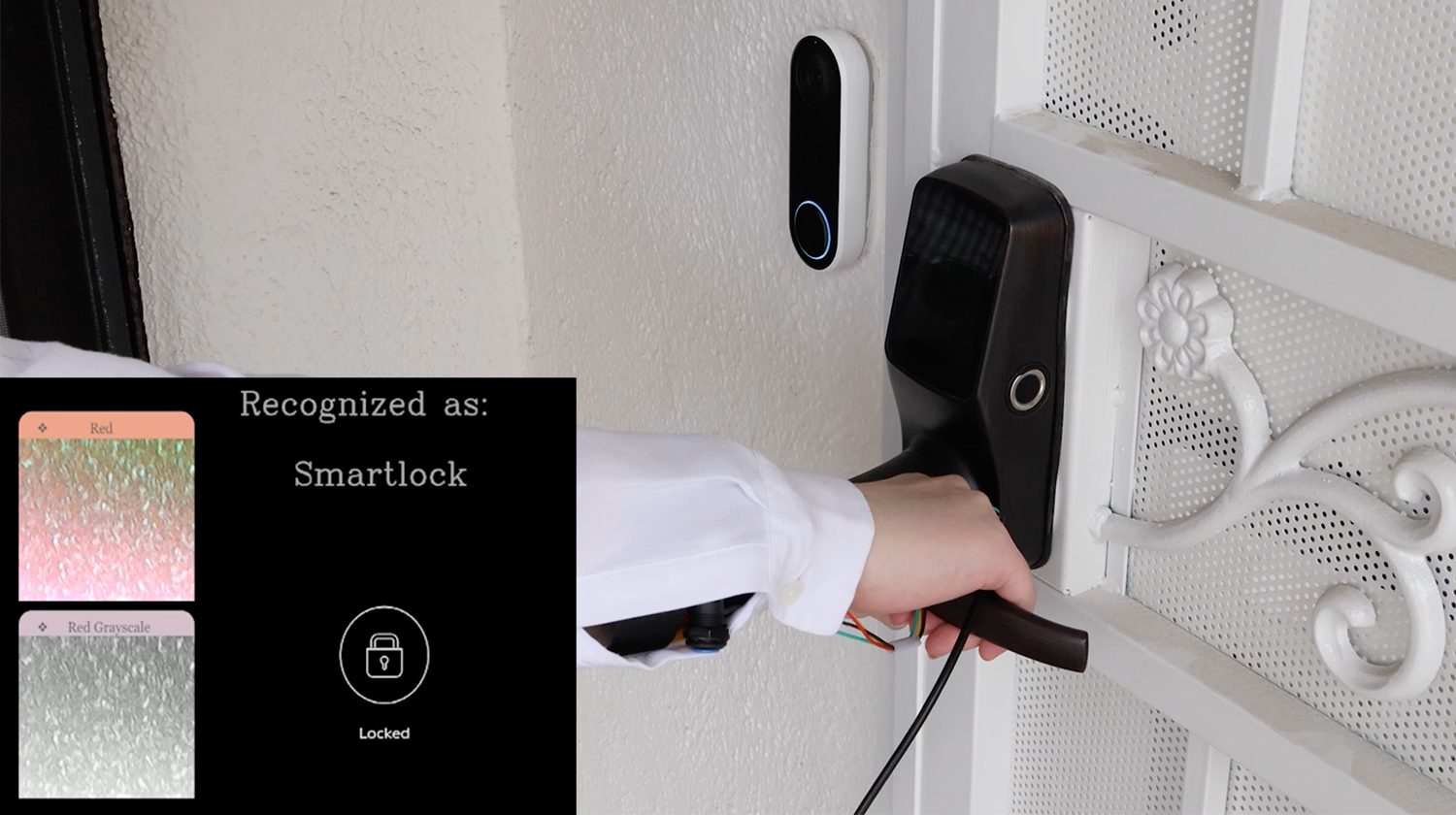

MiniKers: Interaction-Powered Smart Environment Automation

Xiaoying Yang, Jacob Sayono, Jess Xu, Jiahao "Nick" Li, Josiah Hester, Yang Zhang (IMWUT 2022)

[Slides]

[DOI]

[PDF]

[Github]

SkinProfiler: Low-Cost 3D Scanner for Skin Health Monitoring with Mobile Devices

Zhiying Li, Tejas Viswanath, Zihan Yan, Yang Zhang (MobiSys Workshop 2022)

[DOI]

[PDF]

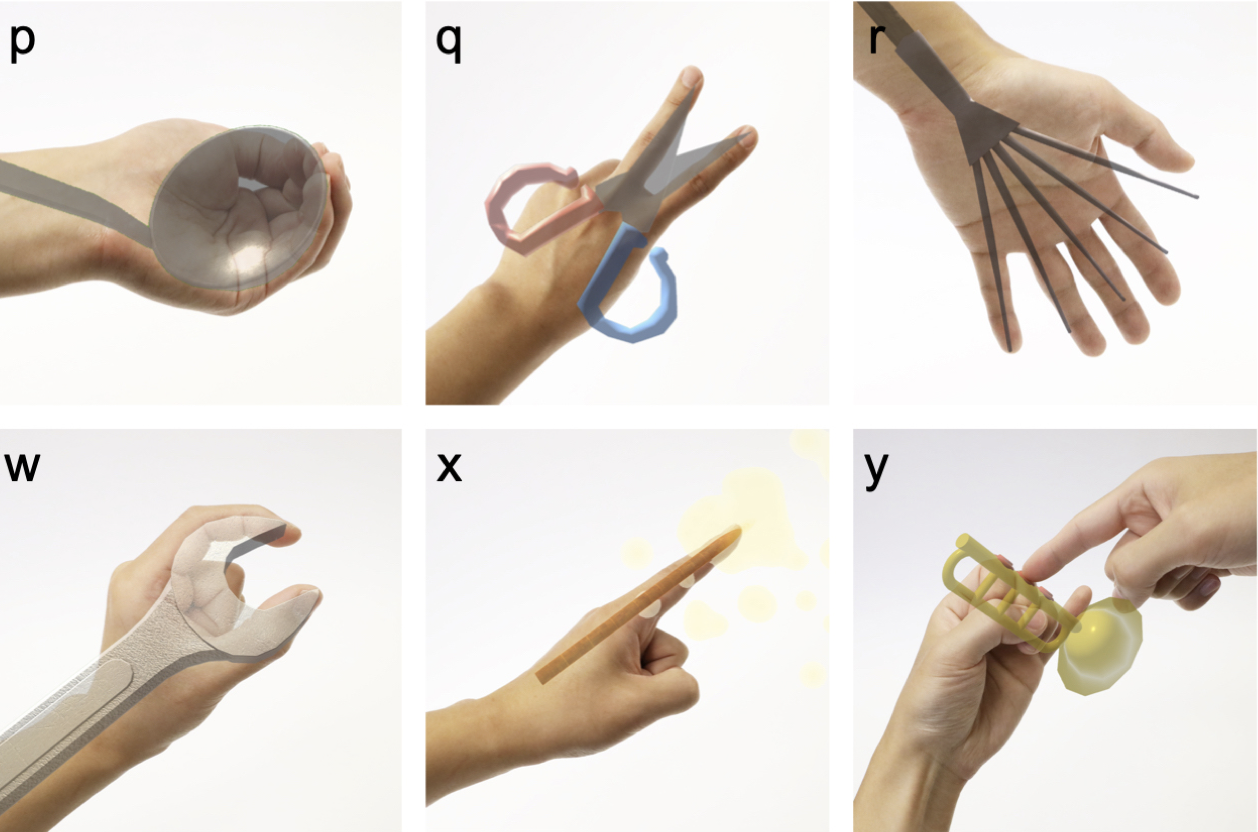

Hand Interfaces: Using Hands to Imitate Objects in AR/VR for Expressive Interactions

Siyou Pei, Alexander Chen, Jaewook Lee, Yang Zhang (CHI 2022)

[Video]

[DOI]

[PDF]

[Github]

Honorable Mention Award

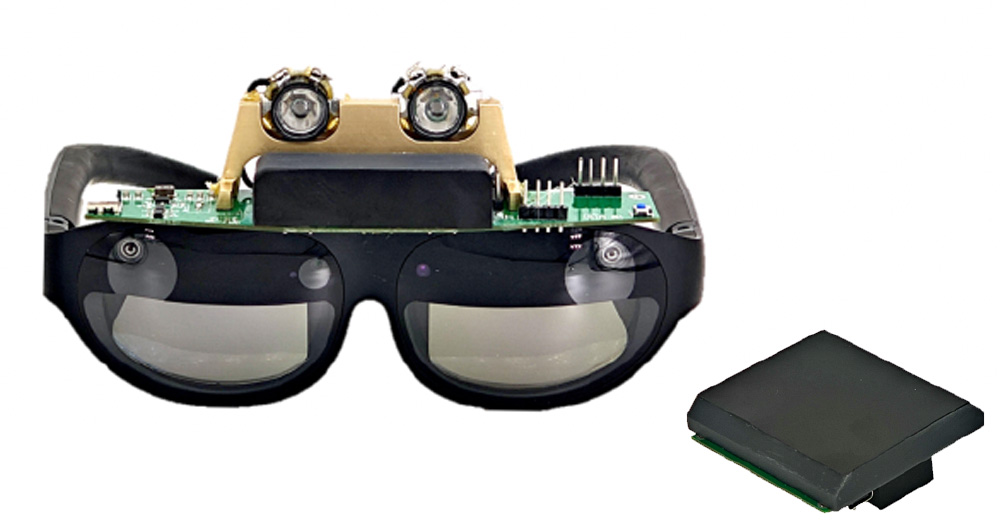

EmoGlass: an End-to-End AI-Enabled Wearable Platform for Enhancing Self-Awareness of Emotional Health

Zihan Yan, Yufei Wu, Yang Zhang, Anthony Chen (CHI 2022)

[DOI]

[PDF]

FaceBit: Smart Face Masks Platform

Alexander Curtiss, Blaine Rothrock, Abu Bakar, Nivedita Arora, Jason Huang, Zachary Englhardt, Aaron-Patrick Empedrado, Chixiang Wang, Saad Ahmed, Yang Zhang, Nabil Alshurafa, Josiah Hester (IMWUT 2021)

[DOI]

[PDF]

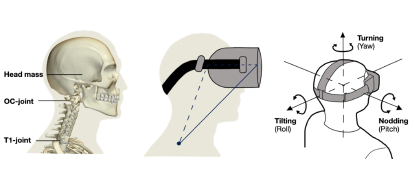

Nod to Auth: Fluent AR/VR Authentication with User Head-Neck Modeling

CubeSense: Wireless, Battery-Free Interactivity through Low-Cost Corner Reflector Mechanisms

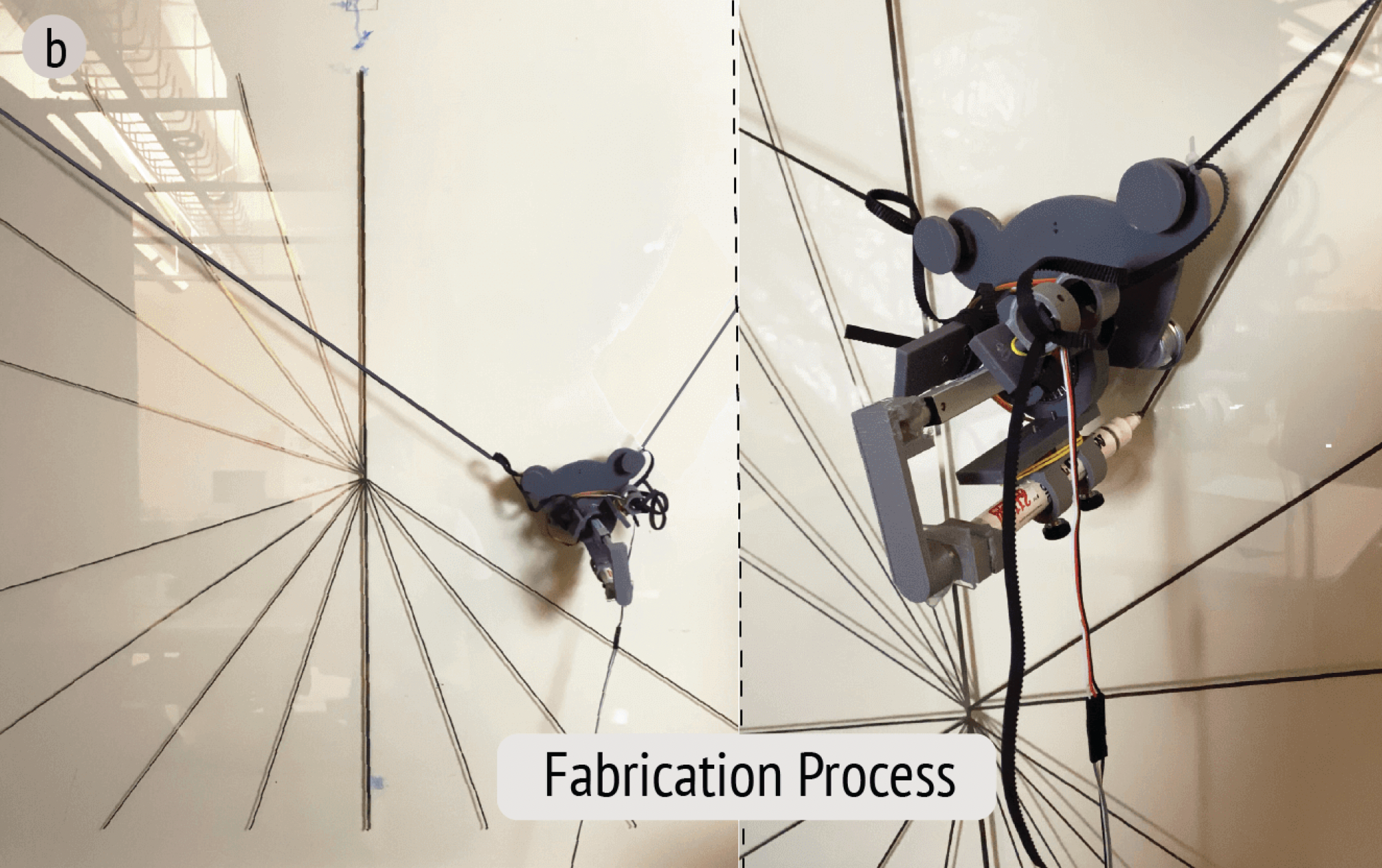

Duco: Autonomous Large-Scale Direct-Circuit-Writing (DCW) on Vertical Everyday Surfaces Using A Scalable Hanging Plotter

Tingyu Cheng, Bu Li, Yang Zhang, Yunzhi Li, Charles Ramey, Eui Min Jung, Yepu Cui, Sai Ganesh Swaminathan, Youngwook Do, Manos Tentzeris, Gregory D. Abowd, HyunJoo Oh (IMWUT 2021)

[Video]

[DOI]

[PDF]

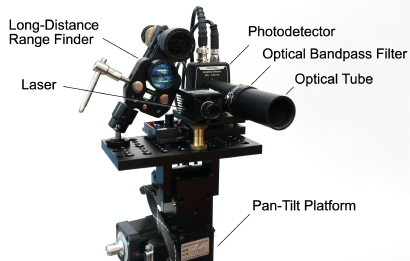

Vibrosight++: City-Scale Sensing Using Existing Retroreflective Signs and Markers

Yang Zhang, Sven Mayer, Jesse T. Gonzalez, Chris Harrison (CHI 2021)

[Video]

[DOI]

[PDF]

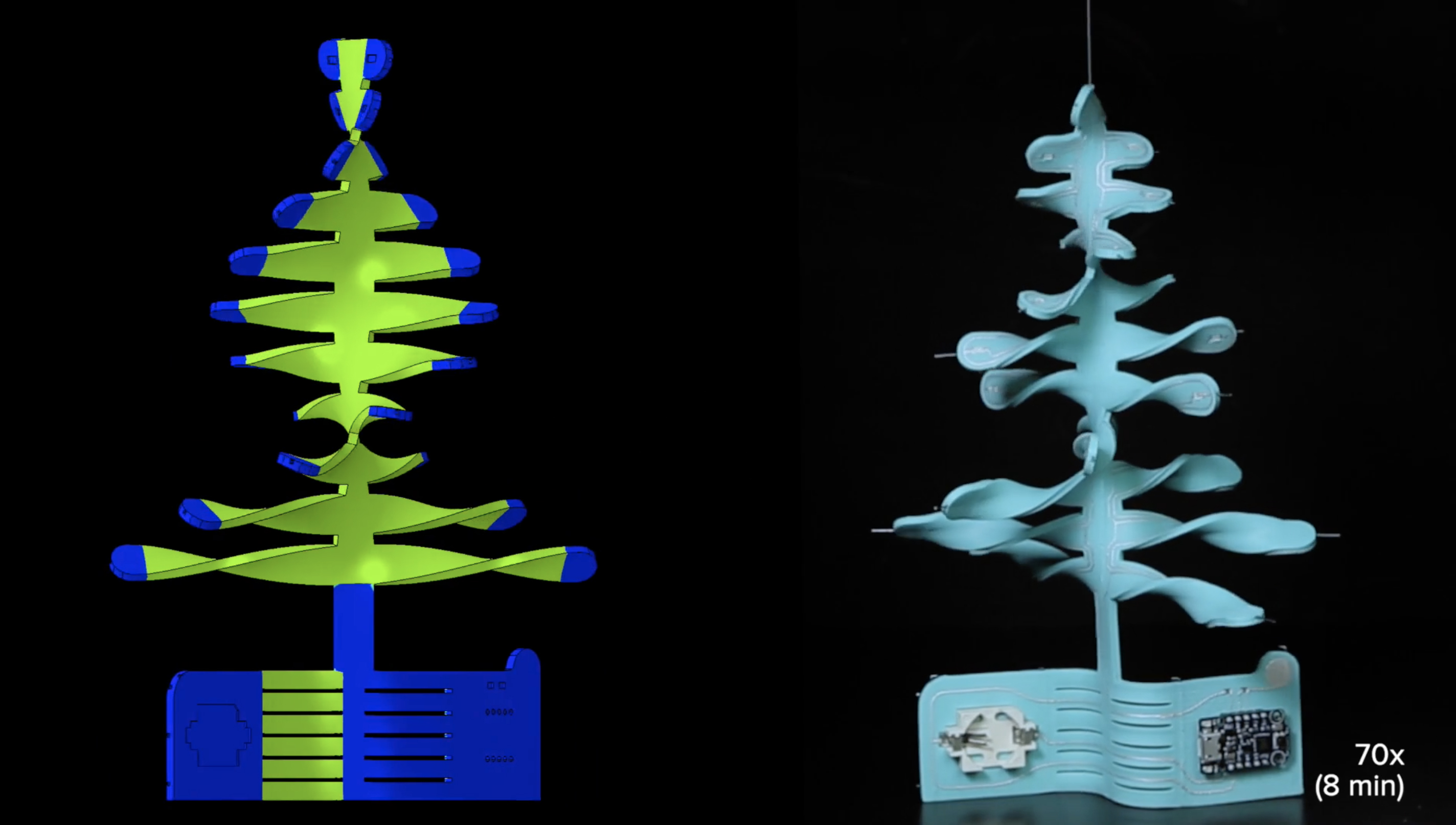

MorphingCircuit: An Integrated Design, Simulation, and Fabrication Workflow for Self-morphing Electronics

G Wang, F Qin, H Liu, Y Tao, Y Zhang, YJ Zhang, and L Yao (IMWUT 2020)

[Video]

[DOI]

[PDF]

OptoSense: Towards Ubiquitous Self-Powered Ambient Light Sensing Surfaces

D Zhang, JW Park, Y Zhang, Y Zhao, Y Wang, Y Li, T Bhagwat, W Chou, X Jia, B Kippelen, C Fuentes-Hernandez, T Starner, and G Abowd (IMWUT 2020)

[Video]

[DOI]

[PDF]

Wireality: Enabling Complex Tangible Geometries in Virtual Reality with Worn Multi-String Haptics

C Fang, Y Zhang, M Dworman, C Harrison (CHI 2020)

[Video]

[DOI]

[PDF]

Best Paper Award

Silver Tape: Inkjet-Printed Circuits Peeled-and-Transferred on Versatile Substrates

T Cheng, K Narumi, Y Do, Y Zhang, T Ta, T Sasatani, E Markvicka, Y Kawahara, L Yao, G Abowd, H Oh (IMWUT 2020)

[DOI]

[PDF]

Sozu: Self-Powered Radio Tags for Building-Scale Activity Sensing

Y Zhang, Y Iravantchi, H Jin, S Kumar and C Harrison (UIST 2019)

[Video]

[DOI]

[PDF]

[Code]

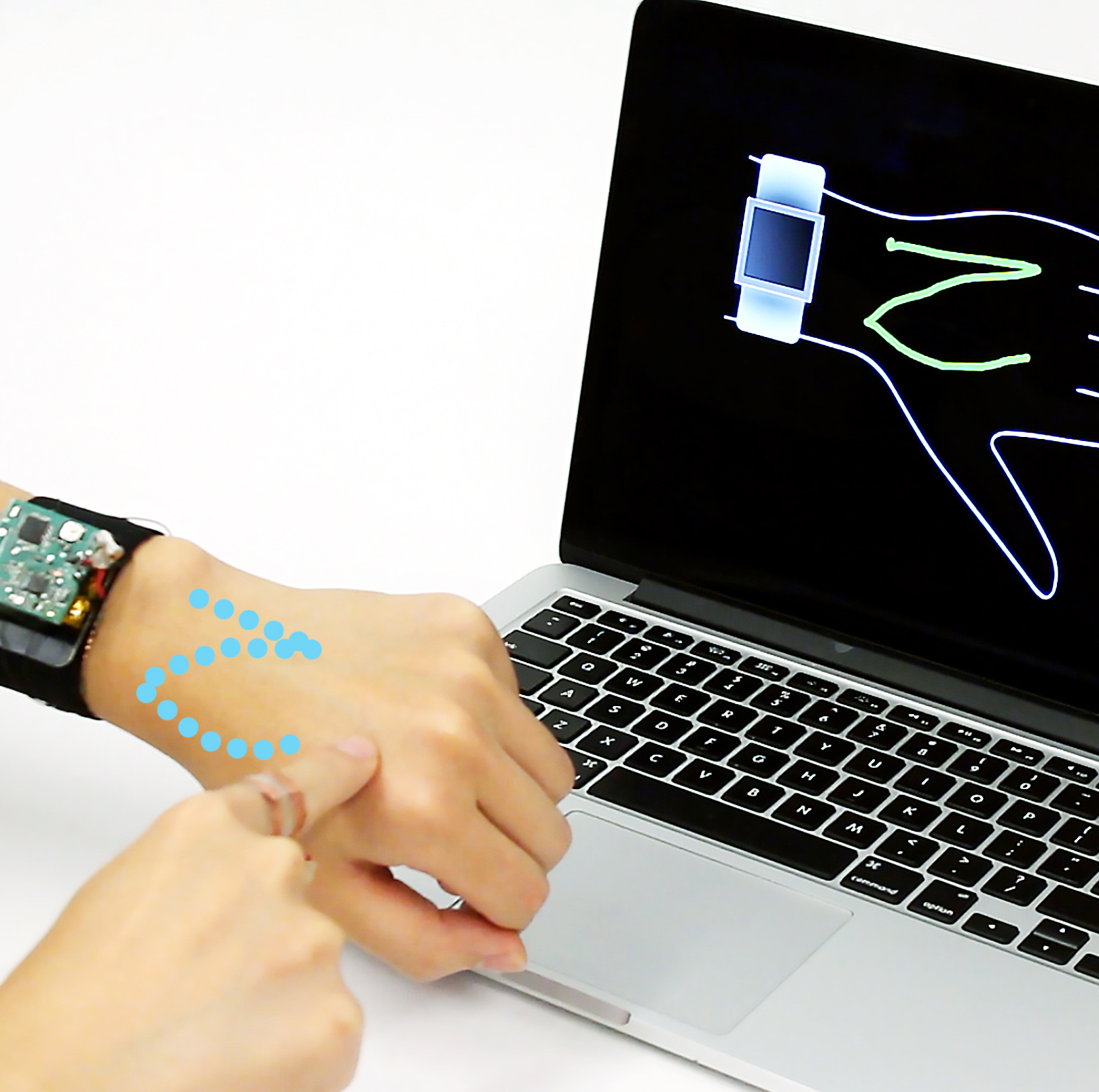

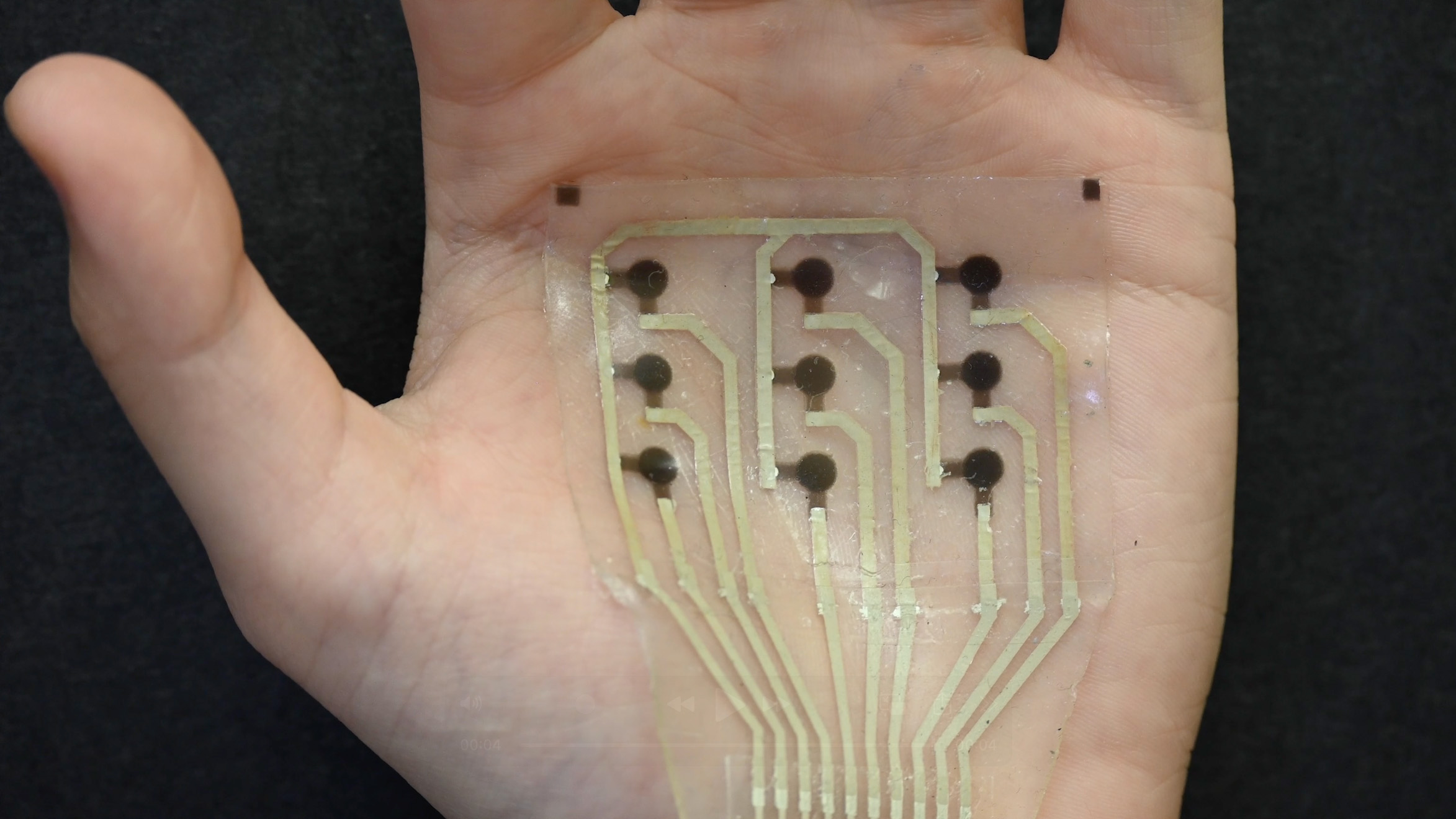

ActiTouch: Robust Touch Detection for On-Skin AR/VR Interfaces

Y Zhang, W Kienzle, Y Ma, S S. Ng, H Benko, C Harrison (UIST 2019)

[Video]

[DOI]

[PDF]

Interferi: Gesture Sensing using On-Body Acoustic Interferometry

Y Iravantchi, Y Zhang, E Bernitsas, M Goel, and C Harrison (CHI 2019)

[Video]

[DOI]

[PDF]

Honorable Mention Award

Sensing Posture-Aware Pen+Touch Interaction on Tablets

Y Zhang, M Pahud, C Holz, H Xia, G Laput, M McGuffin, X Tu, A Mittereder, F Su, W Buxton and K Hinckley (CHI 2019)

[Video]

[DOI]

[PDF]

Honorable Mention Award

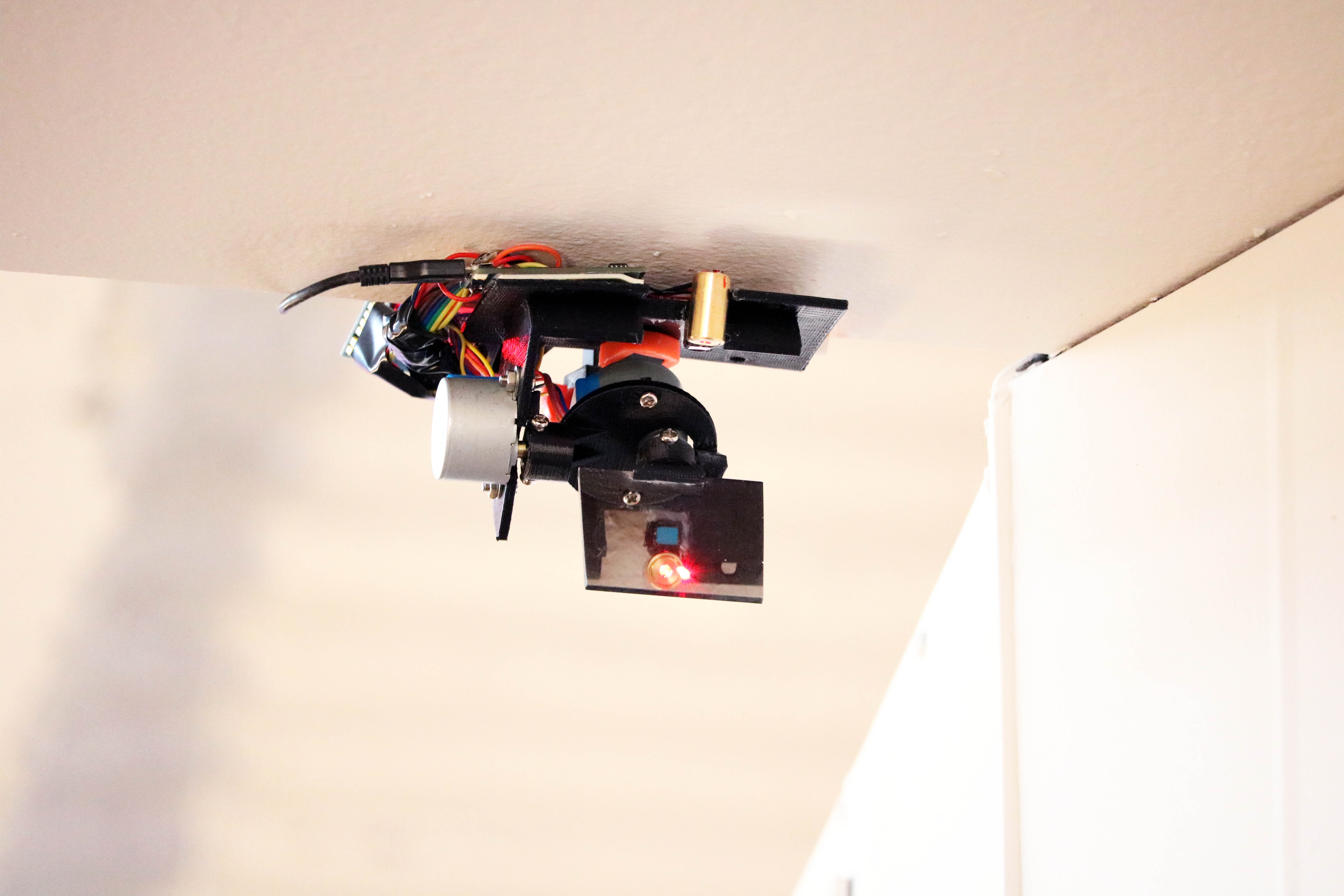

Vibrosight: Long-Range Vibrometry for Smart Environment Sensing

Y Zhang, G Laput and C Harrison (UIST 2018)

[Video]

[DOI]

[PDF]

[Code]

Honorable Mention Award

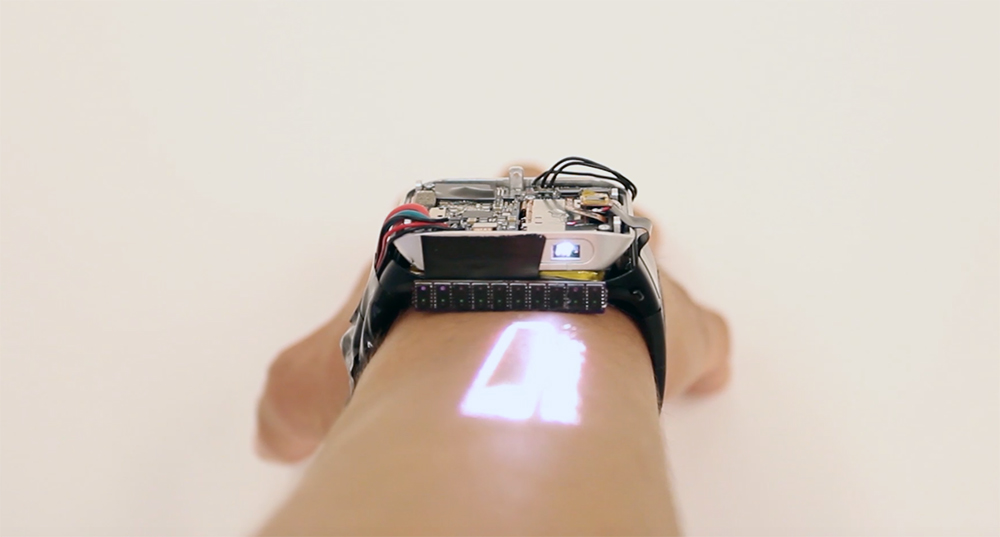

LumiWatch: On-Arm Projected Graphics and Touch Input

R Xiao, T Cao, N Guo, J Zhuo, Y Zhang and C Harrison (CHI 2018)

[Video]

[DOI]

[PDF]

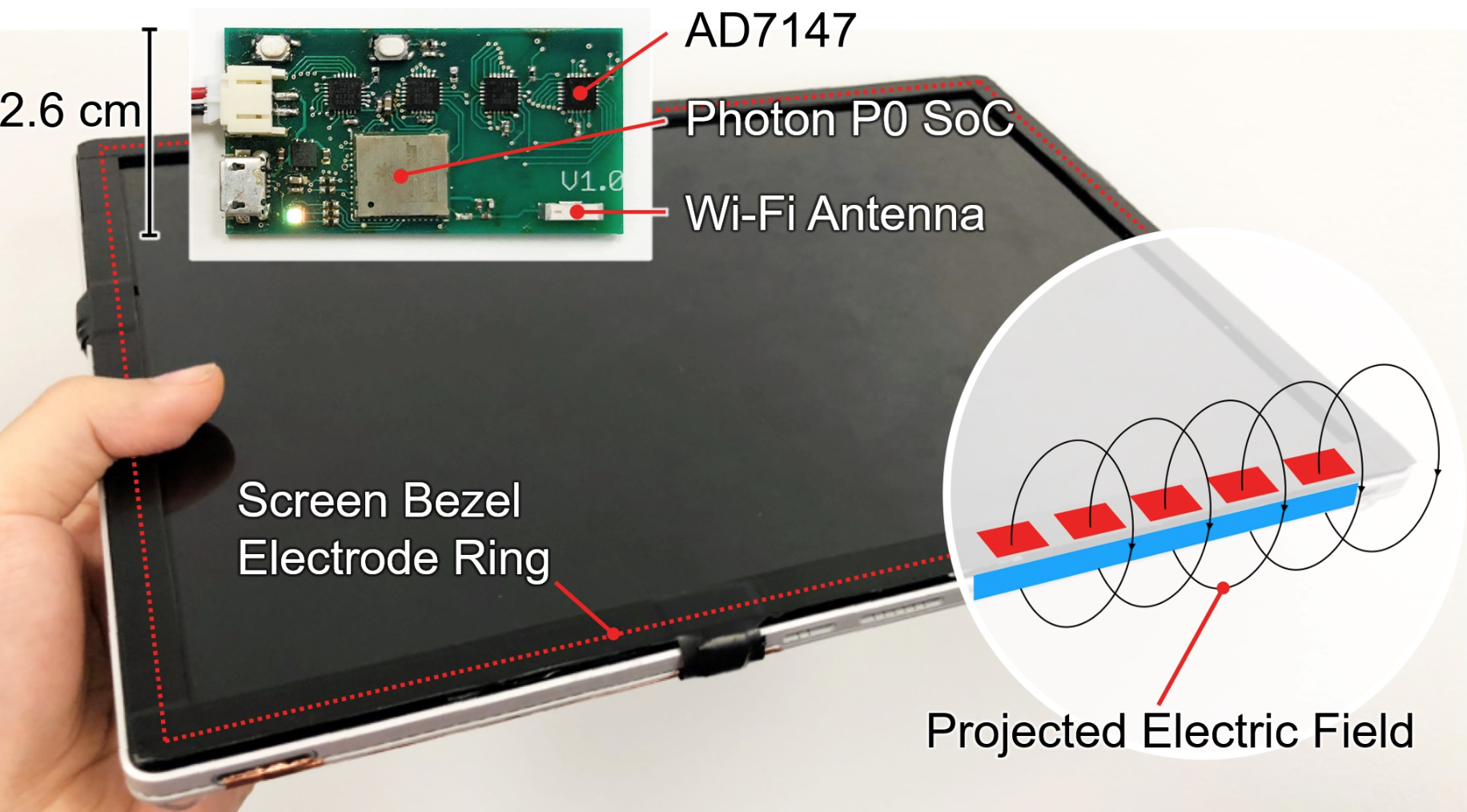

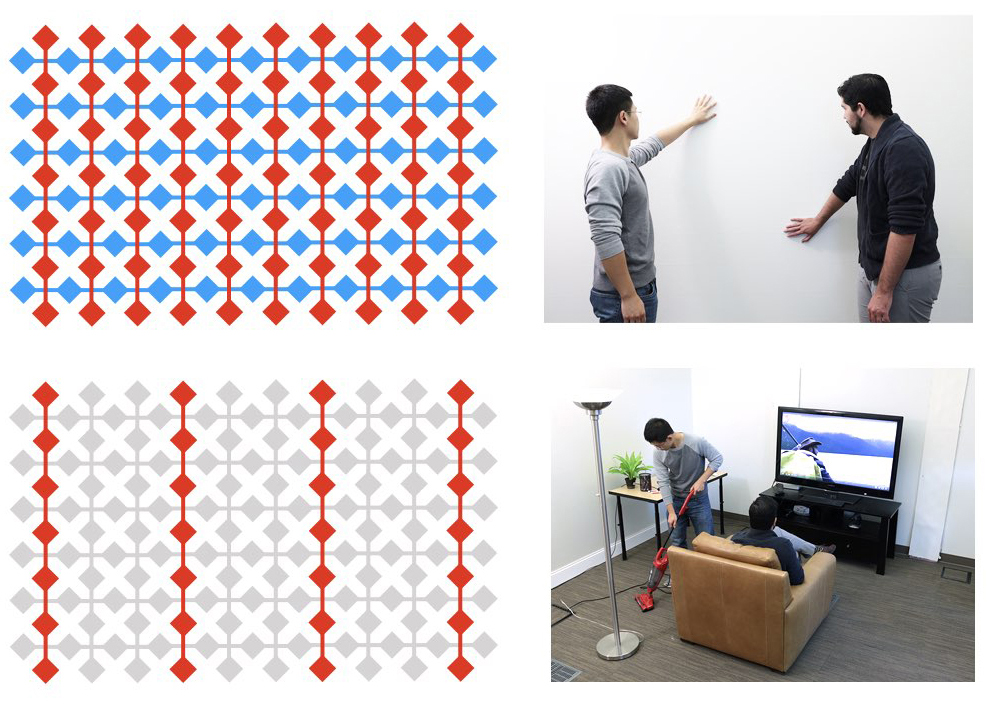

Wall++: Room-Scale Interactive and Context-Aware Sensing

Y Zhang, C Yang, S E. Hudson, C Harrison and A Sample (CHI 2018)

[Video]

[DOI]

[PDF]

Best Paper Award

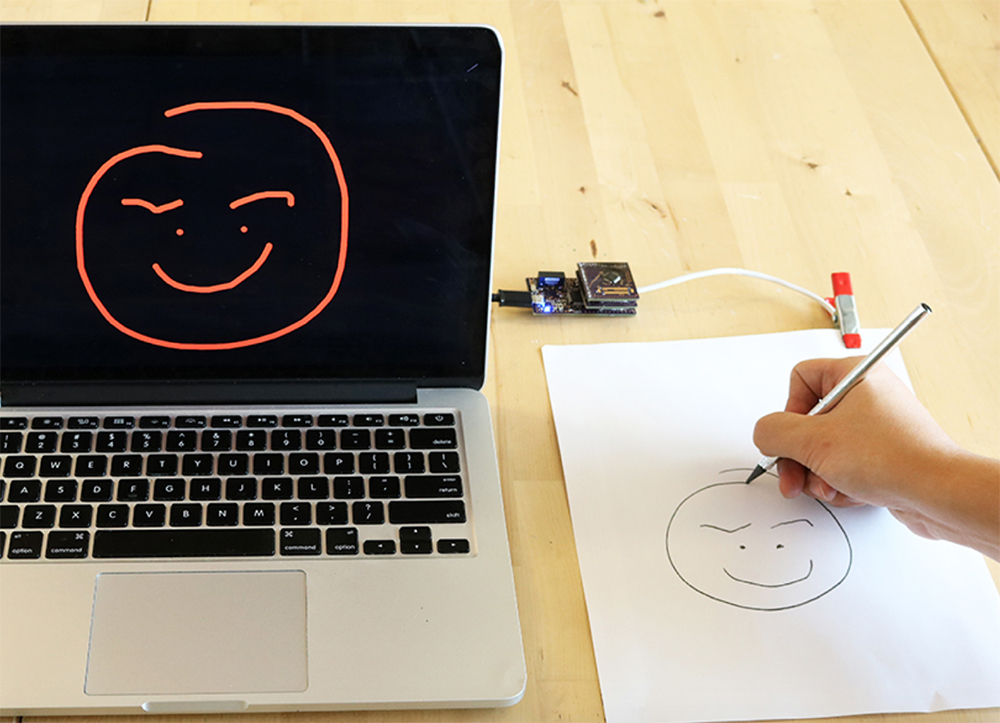

Pulp Nonfiction: Low-Cost Touch Tracking for Paper

Pyro: Thumb-Tip Gesture Recognition Using Pyroelectric Infrared Sensing

J Gong, Y Zhang, X Zhou and XD Yang (UIST 2017)

[Video]

[DOI]

[PDF]

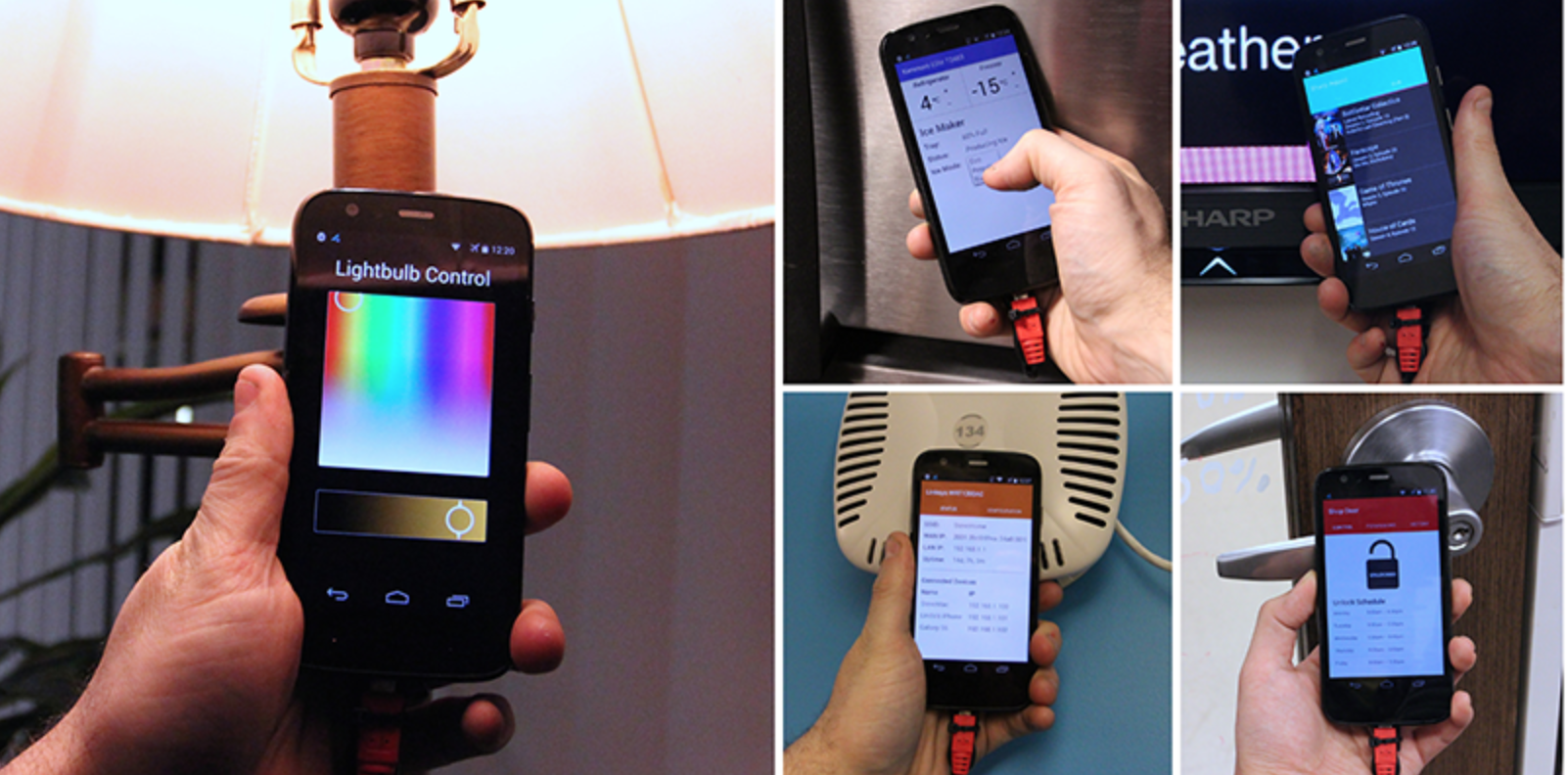

Synthetic Sensors: Towards General-Purpose Sensing

G Laput, Y Zhang and C Harrison (CHI 2017)

[Video]

[DOI]

[PDF]

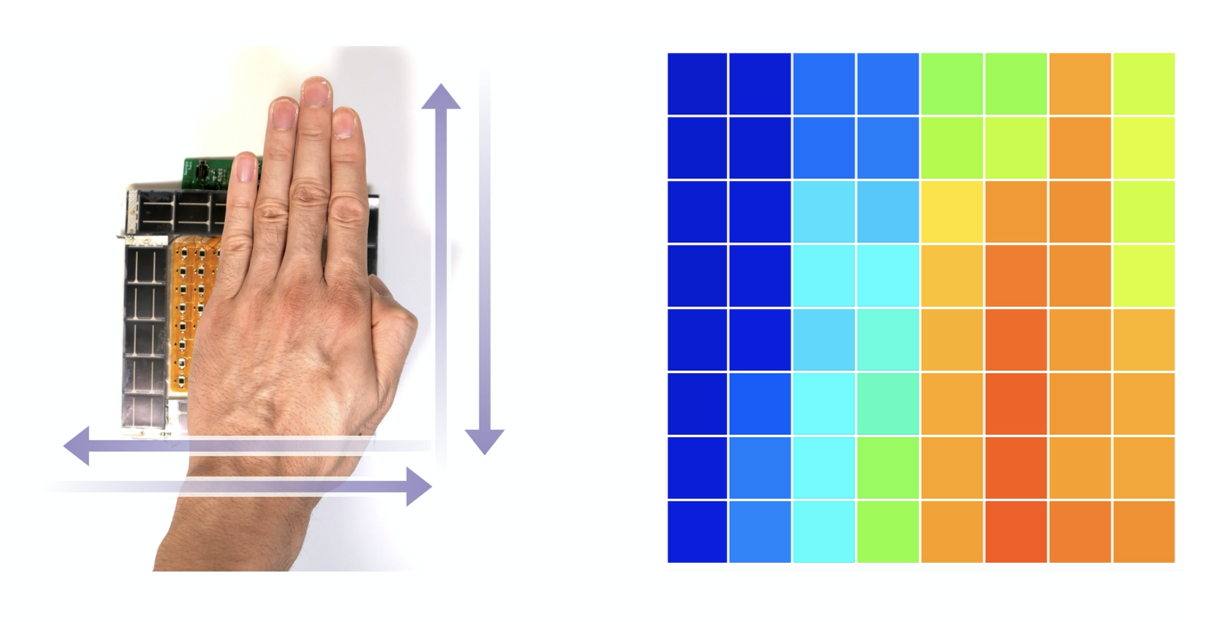

Electrick: Low-Cost Touch Sensing Using Electric Field Tomography

Y Zhang, G Laput and C Harrison (CHI 2017)

[Video]

[DOI]

[PDF]

Deus EM Machina: On-Touch Contextual Functionality for Smart IoT Appliances

R Xiao, G Laput, Y Zhang and C Harrison (CHI 2017)

[Video]

[DOI]

[PDF]

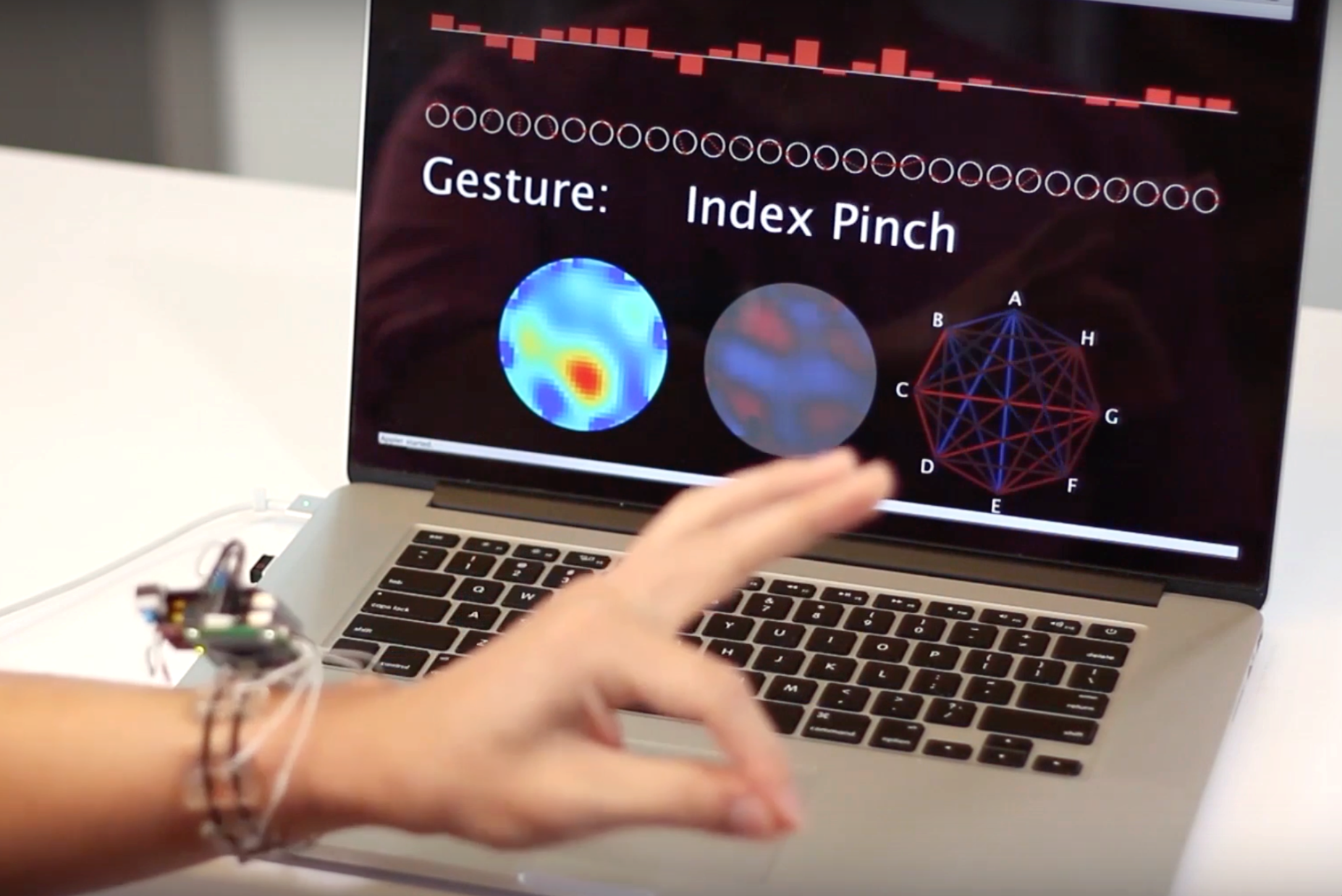

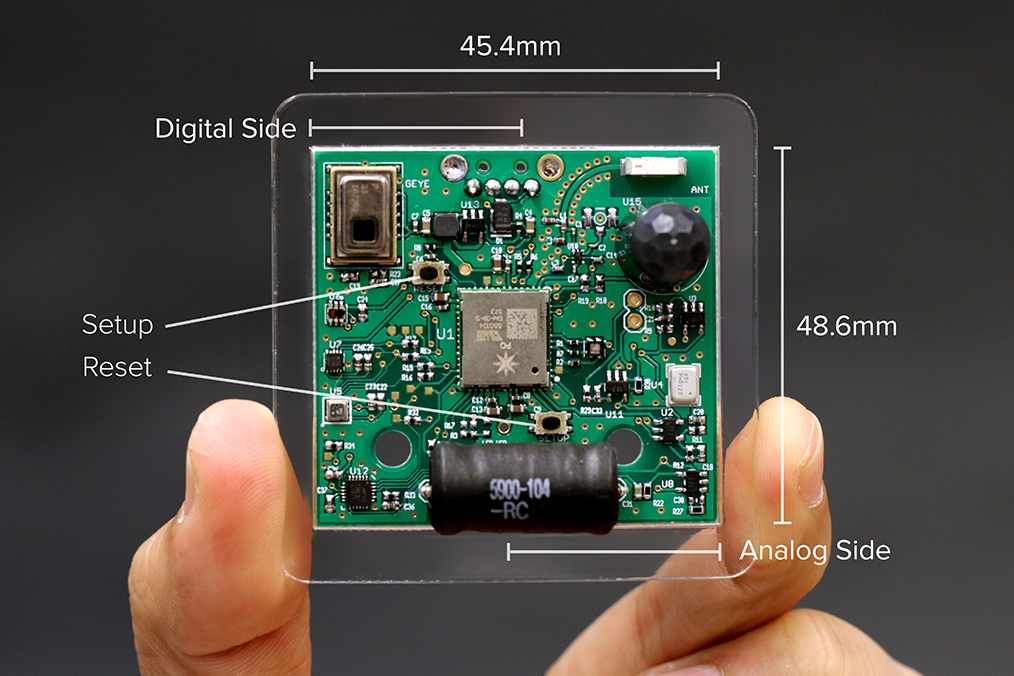

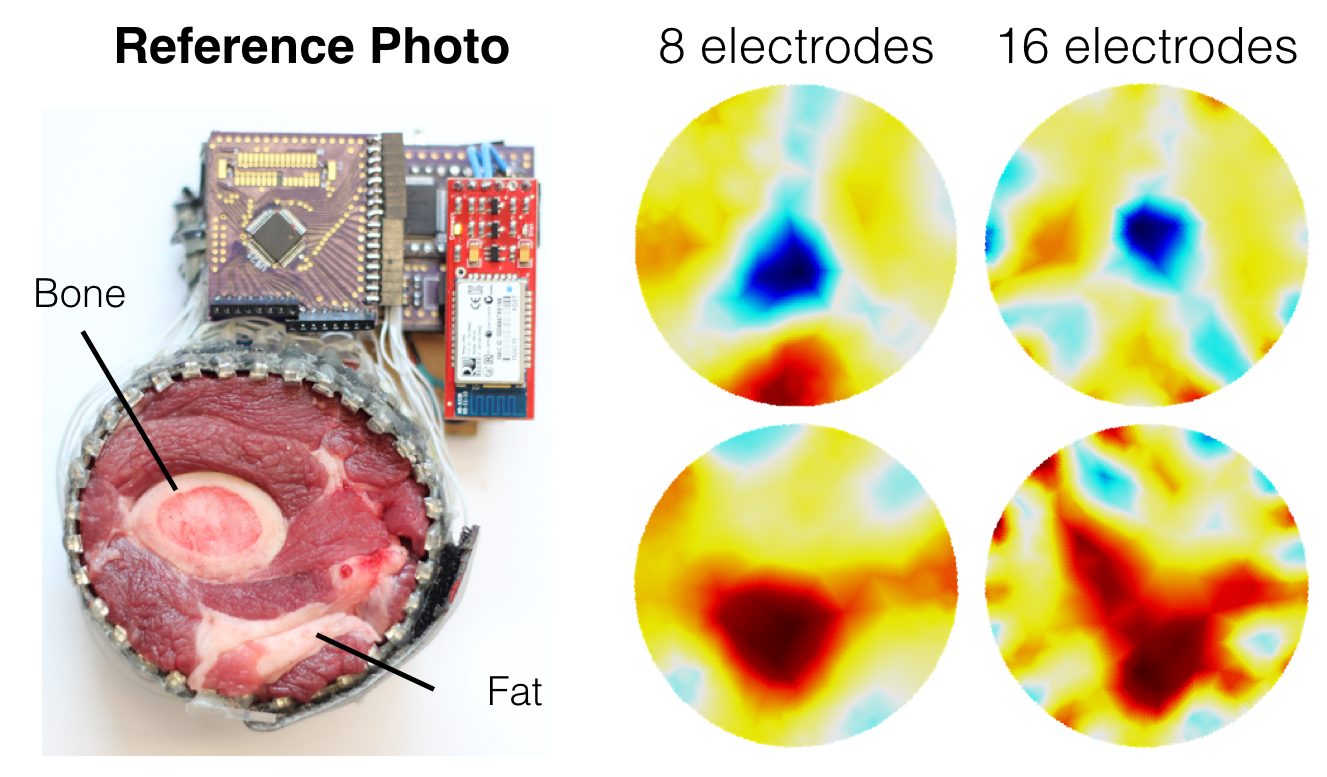

Advancing Hand Gesture Recognition with High Resolution Electrical Impedance Tomography

Y Zhang, R Xiao and C Harrison (UIST 2016)

[Video]

[DOI]

[PDF]

[PCB]

AuraSense: Enabling Expressive Around-Smartwatch Interactions with Electric Field Sensing

J Zhou, Y Zhang, G Laput and C Harrison (UIST 2016)

[Video]

[DOI]

[PDF]

PhD Students

Teaching

- 2026 spring 100 - Electrical and Electronic Circuits

- 2025 spring 100 - Electrical and Electronic Circuits

- 2025 winter 10 - Circuit Theory I

- 2024 fall 209AS - Engineer Interactive Systems

- 2024 spring 100 - Electrical and Electronic Circuits

- 2024 winter 10 - Circuit Theory I

- 2023 fall 209AS - Engineer Interactive Systems

- 2023 spring 100 - Electrical and Electronic Circuits

- 2023 winter 209AS - Engineer Interactive Systems

- 2022 fall 188 - Engineer Interactive Systems

- 2022 spring 209AS - Engineer Interactive Systems

- 2022 winter 100 - Electrical and Electronic Circuits

Awards

- 2025 Google Research Scholar Award

- 2025 CHI Honorable Mention Award for T2IRay

- 2024 CHI Honorable Mention Award for Watch Your Mouth

- 2023 IMWUT Distinguished Paper Award for Mites

- 2022 UIST Best Demo Honorable Mention for ForceSight

- 2022 CHI Honorable Mention Award for Hand Interfaces

- 2021 IMWUT Distinguished Paper Award for OptoSense

- 2020 CHI Best Paper Award for Wireality

- 2019 CHI Honorable Mention Award for Posture-Aware Pen+Touch Interactions

- 2019 CHI Honorable Mention Award for Interferi

- 2018 CHI Best Paper Award for Wall++

- 2018 UIST Honorable Mention Award for Vibrosight

- 2018 Fast Company Innovation by Design Award Finalist for LumiWatch

- 2017 Qualcomm Innovation Fellowship Winner

- 2017 Fast Company Innovation by Design Award Finalist for Synthetic Sensors

- 2016 CHI Honorable Mention Award for SkinTrack

- 2015 ISS Best Short Paper for Quantifying the Benefit of On-Screen Electrostatic Haptic

Service

Subcommittee Chair: CHI'25-26

Program Committee: CHI'20-24, UIST'21-24,'26

Associate Editor: IMWUT'23-26

Press

- NSF News: 'Fitbit for the face' can turn any face mask into smart monitoring device

- Forbes: ‘Smart Face Masks’— Researchers Have Created A Way To Digitize Masks

- Daily Bruin: Researchers collaborate in development of smart mask for health care workers

- Wevolver: Smart Face Mask Platform in Response to COVID-19 Pandemic

- New Atlas: Wireality offers a novel way to let you "feel" complex objects in VR

- Engadget: This VR system tethers your hands to your shoulders to improve haptics

- TechCrunch: This robot uses lasers to ‘listen’ to its environment

- Hackaday: Vibrosight hears when you are sleeping. It knows when you're awake

- Fast Company: Turn Your Wall Into A Touch Screen For $20

- NBC News: New smart wall lets you control your home with swipes, taps

- Engadget: Touch-sensitive wall might let you control home devices in the future

- Digital Trends: This conductive paint transforms regular walls into giant touchpads

- The Verge: You may soon be able to control your home with a smart wall

- Architect Magazine: Transforming Walls into Smart Surfaces

- Science Magazine: Watch researchers turn a wall into Alexa’s eyes and ears

- MIT Technology Review: A Cheap, Simple Way to Make Anything a Touch Pad

- New Scientist: Spray-on touch controls give an interactive twist to any surface

- The Wall Street Journal: How to Turn Anything into a Touchpad

- The Verge: Electrick lets you spray touch controls onto any object or surface

- Engadget: Get ready to 'spray' touch controls onto any surface

- CNET: Almost anything can become a touchpad with some spray paint

- Popular Science: What a Jell-O brain tells us about the future of human-machine interaction

- Gizmodo: Scientists Figure Out How to Turn Anything Into a Touchscreen Using Conductive Spray Paint

- TechCrunch: New technique turns anything into a touch sensor

- Pittsburgh Post-Gazette: Touch-sensing technology born of CMU researchers grabs companies' interest

- TechCrunch: Google-funded ‘super sensor’ project brings IoT powers to dumb appliances

- MIT Technology Review: Use Your Arm as a Smart-Watch Touch Pad

- The Verge: New tech turns your skin into a touchscreen for your smartwatch

- Engadget: Navigate your smartwatch by touching your skin

- Gizmodo: This New 'Skinterface' Could Make Smartwatches Suck Less

- CNET: SkinTrack turns your entire forearm into a smartwatch touchpad

- WIRED: SkinTrack Turns Your Arm Into a Touchpad

- Gizmodo: This Smartwatch Detects Gestures By Watching the Muscles Inside Your Arm Move

- Hackaday: Impedance Tomography is the new X-ray Machine

- New Scientist: No-touch smartwatch scans the skin to see the world around you